Recent Stories

Addressing LLM03:2025 Supply Chain Vulnerabilities in LLM Apps

Jan 16, 2025 Information hub

Learn how to address LLM03:2025 Supply Chain vulnerabilities in large language model applications. Discover key risks, mitigation strategies, and best practices for securing AI systems.

Addressing LLM02:2025 Sensitive Information Disclosure Risks

Jan 16, 2025 Information hub

Learn how to address LLM02:2025 Sensitive Information Disclosure, a critical vulnerability in large language models, and protect sensitive data effectively.

Strategies to Mitigate LLM01:2025 Prompt Injection Risks

Jan 16, 2025 Information hub

Learn effective strategies to mitigate LLM01:2025 Prompt Injection risks and secure your large language model applications against evolving threats.

A Comprehensive Guide to the OWASP Top 10 for LLM Applications 2025

Jan 15, 2025 Information hub

Dive into the OWASP Top 10 for LLM Applications 2025 to understand key vulnerabilities, trends, and mitigation strategies for secure AI systems.

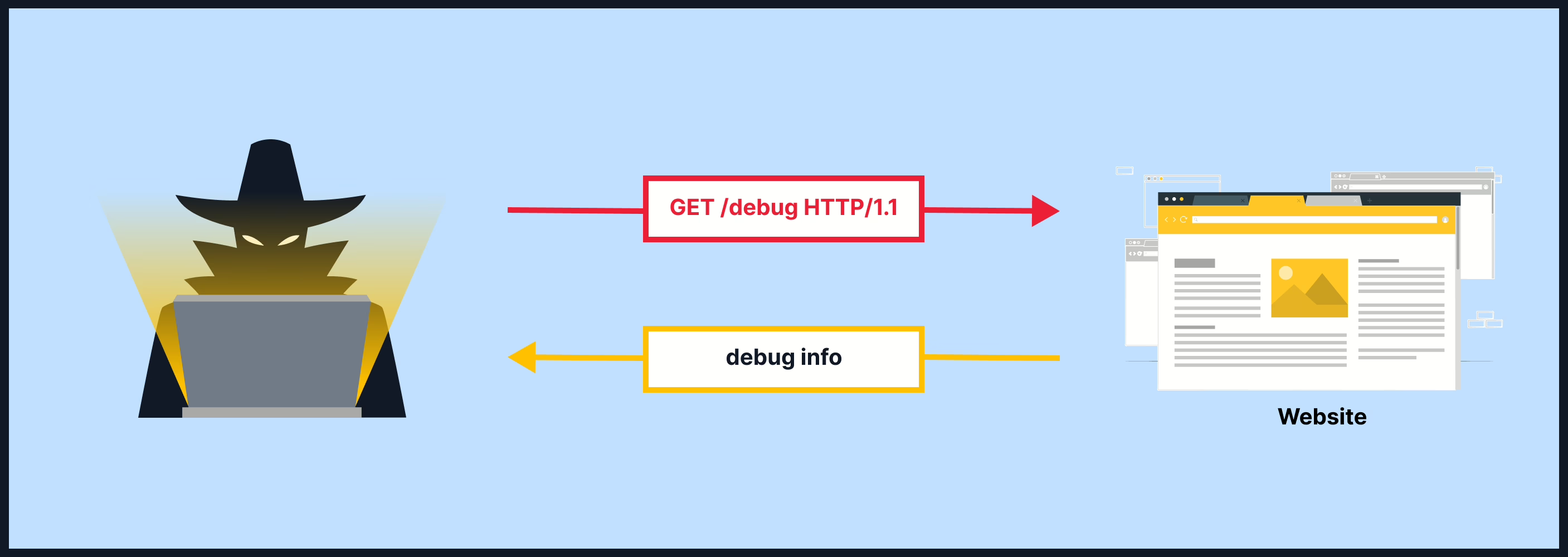

Understanding Information Disclosure Vulnerabilities: Risks & Fixes

Dec 11, 2024 Information hub

Explore what information disclosure vulnerabilities are, their risks, real-world examples, and how to prevent them with secure coding and system practices.

Cookies vs Supercookies: The Hidden Layers of Online Tracking

Dec 5, 2024 Information hub

Discover the differences between cookies and supercookies, their privacy implications, and how to protect your data while browsing the web securely.

Product Security Best Practices for Safer Products

Dec 3, 2024 Information hub

Explore product security best practices to protect data, maintain trust, and prevent risks throughout your product's lifecycle, from design to decommissioning.

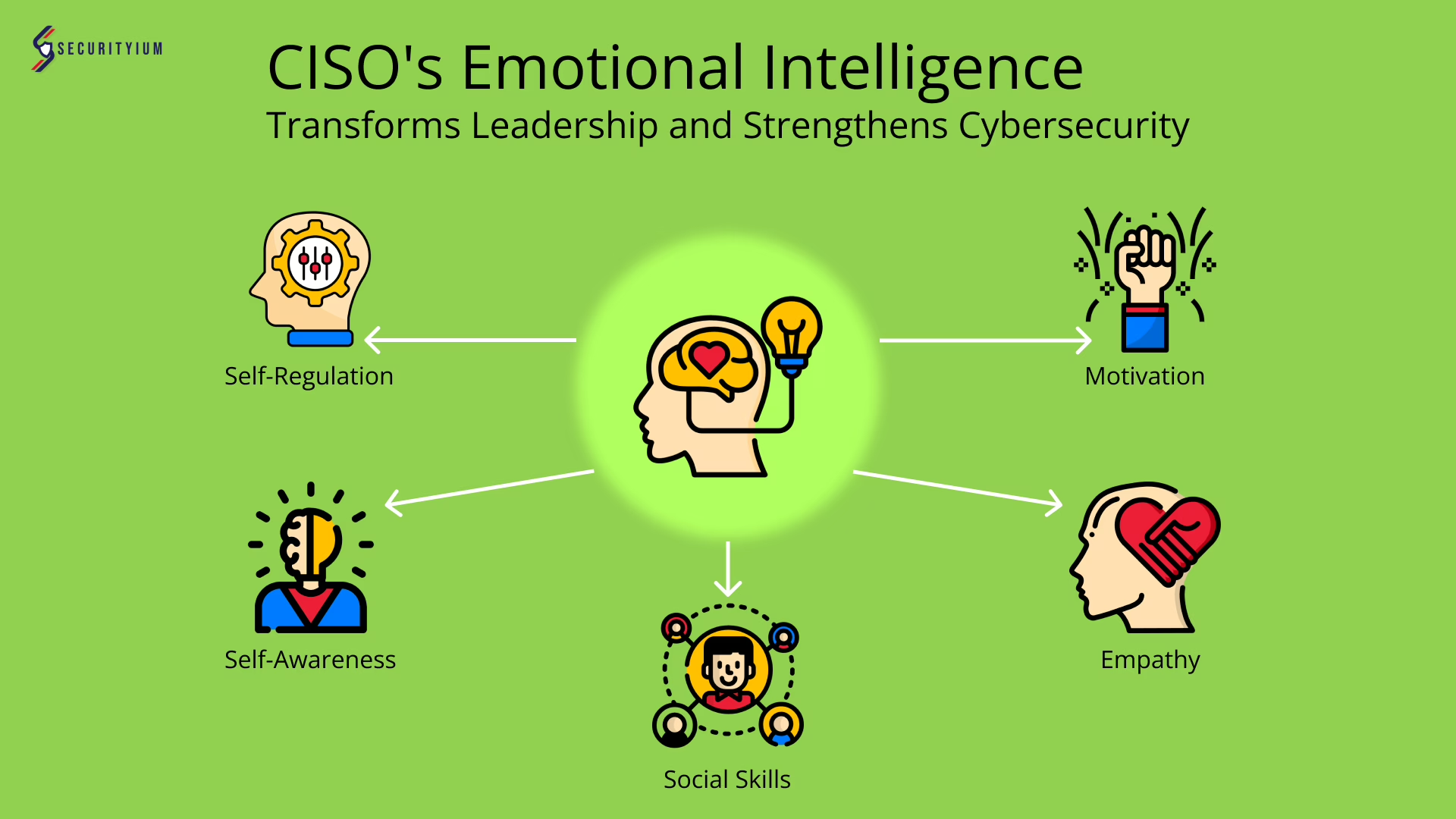

How a CISO’s Emotional Intelligence Transforms Leadership and Strengthens Cybersecurity

Dec 2, 2024 Information hub

Learn how a CISO's emotional intelligence boosts leadership, enhances communication, and builds a strong security culture in an evolving cyber landscape.