Recent Stories

Pen Test vs Vulnerability Scan: Understanding Their Roles in Cybersecurity

Oct 22, 2024 Information hub

Discover the key differences between a pen test and a vulnerability scan. Learn how each method helps organizations identify vulnerabilities and strengthen their cybersecurity defenses.

Understanding White Box and Black Box Testing for Better Software Quality

Oct 22, 2024 Information hub

Discover the benefits of white box and black box testing in software development. Learn how both approaches help in improving functionality, security, and user experience.

White Box and Black Box Testing: Understanding Key Differences and Benefits

Oct 22, 2024 Information hub

Discover how white box and black box testing work, their benefits, and why combining them helps you deliver secure, high-quality software in today's digital world.

Black Box vs White Box Testing: Understanding the Differences and Best Practices

Oct 22, 2024 Information hub

Discover the essential differences between black box and white box testing. Learn how to use each method effectively for secure, reliable, and high-quality software projects.

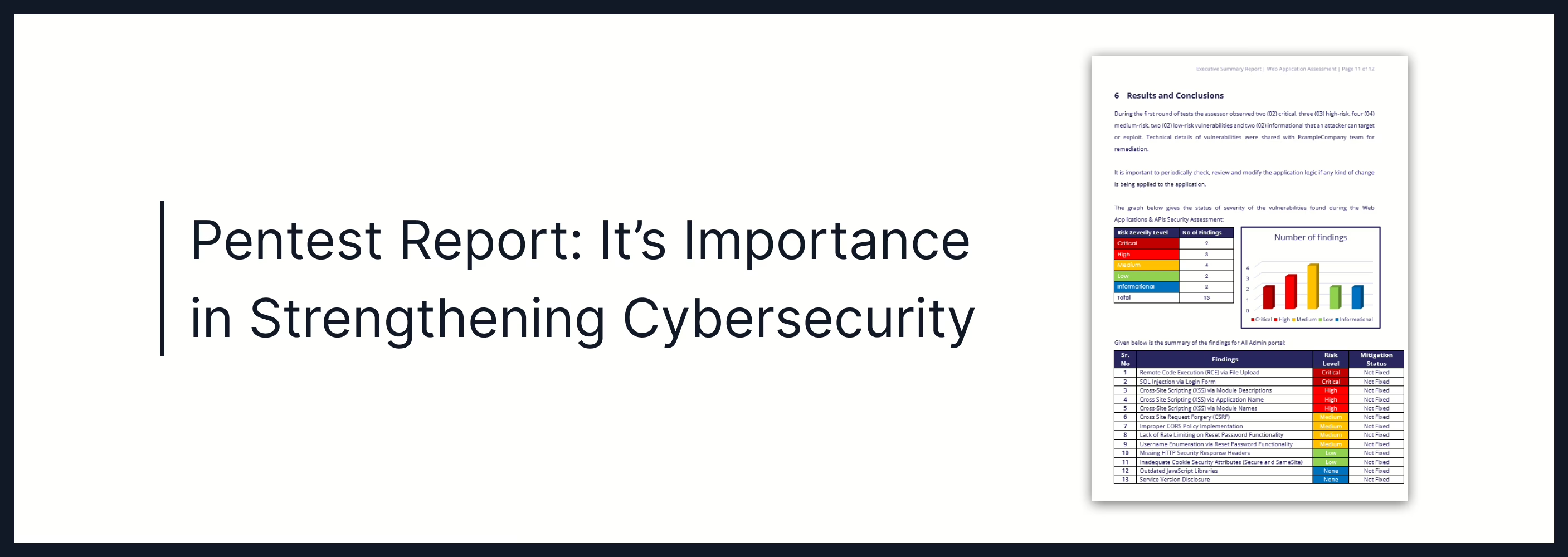

Pentest Report: Its Importance in Strengthening Cybersecurity

Oct 22, 2024 Information hub

Discover how a pentest report outlines security risks and provides actionable steps to fix vulnerabilities. Learn how it strengthens cybersecurity and ensures compliance.

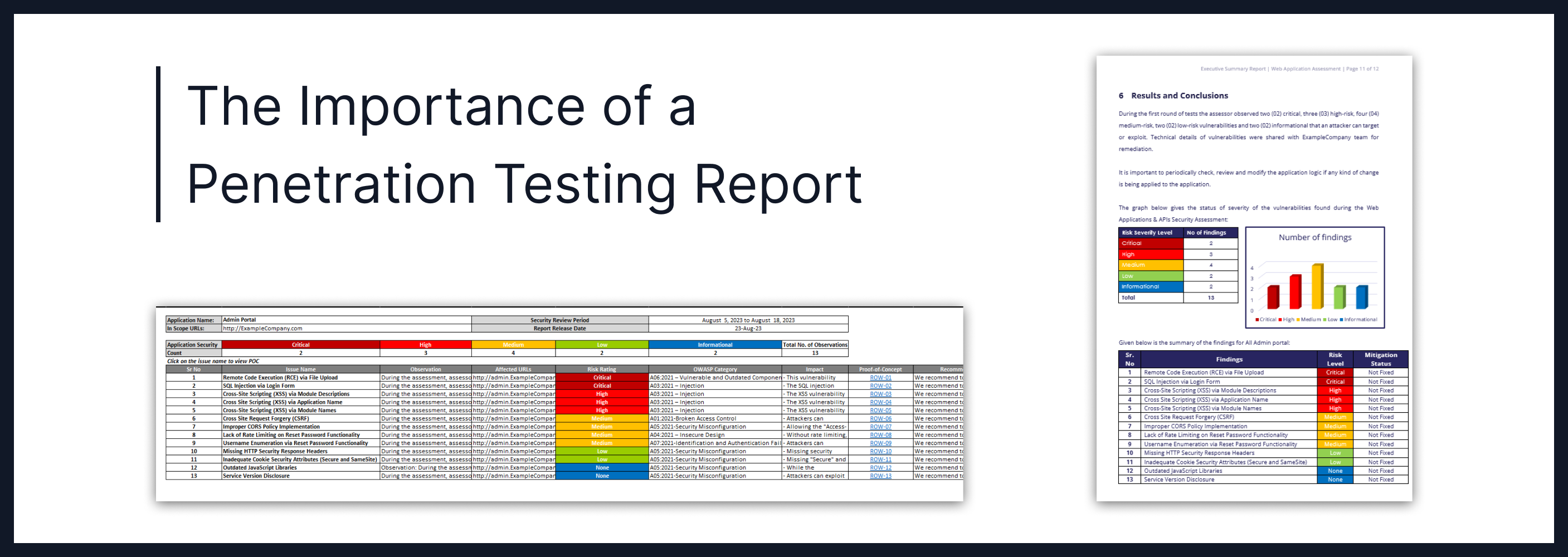

The Importance of a Penetration Testing Report for Stronger Cybersecurity

Oct 22, 2024 Information hub

A penetration testing report helps businesses find and fix cybersecurity risks. Discover how this report provides clear steps to protect systems from cyber threats.

Pentest Methodology: A Step-by-Step Approach to Effective Cybersecurity Testing

Oct 22, 2024 Information hub

Discover how a pentest methodology provides a structured way to find vulnerabilities, ensure cybersecurity, and comply with industry standards through detailed testing steps.

Comprehensive Methodology for Penetration Testing to Strengthen Cybersecurity

Oct 22, 2024 Information hub

Explore the methodology for penetration testing and how it helps find security flaws. Learn how it helps you stay compliant, improve defenses, and protect your organization from cyberattacks.