Recent Stories

What is SAST: A Guide to Secure Your Applications

Nov 5, 2024 Information hub

Discover what SAST is and its role in protecting code against vulnerabilities. Our guide covers benefits, real-world examples, and integration tips for effective application security.

SAST Meaning: Secure Your Code with Static Security Testing

Nov 5, 2024 Information hub

Discover the meaning of SAST (static application security testing), a method for finding security issues in source code early in development. See why SAST is key to catching vulnerabilities, improving code quality, and supporting compliance.

Best Mobile Application Penetration Testing Tools in 2025

Nov 5, 2024 Information hub

Learn about essential mobile application penetration testing tools, their features, and best practices to secure your app, protect user data, and stay compliant with regulations.

Essential Security Testing Tools for Identifying and Reducing Cyber Risks

Nov 5, 2024 Information hub

Explore security testing approaches such as SAST, DAST, and IAST (static, dynamic, and interactive application security testing), and learn how they help identify cyber risks, support compliance, and strengthen cybersecurity defenses.

The Importance of Application Security Testing

Nov 5, 2024 Information hub

Discover why application security testing matters for securing data, avoiding breaches, and complying with regulations. Learn the key testing types and emerging trends.

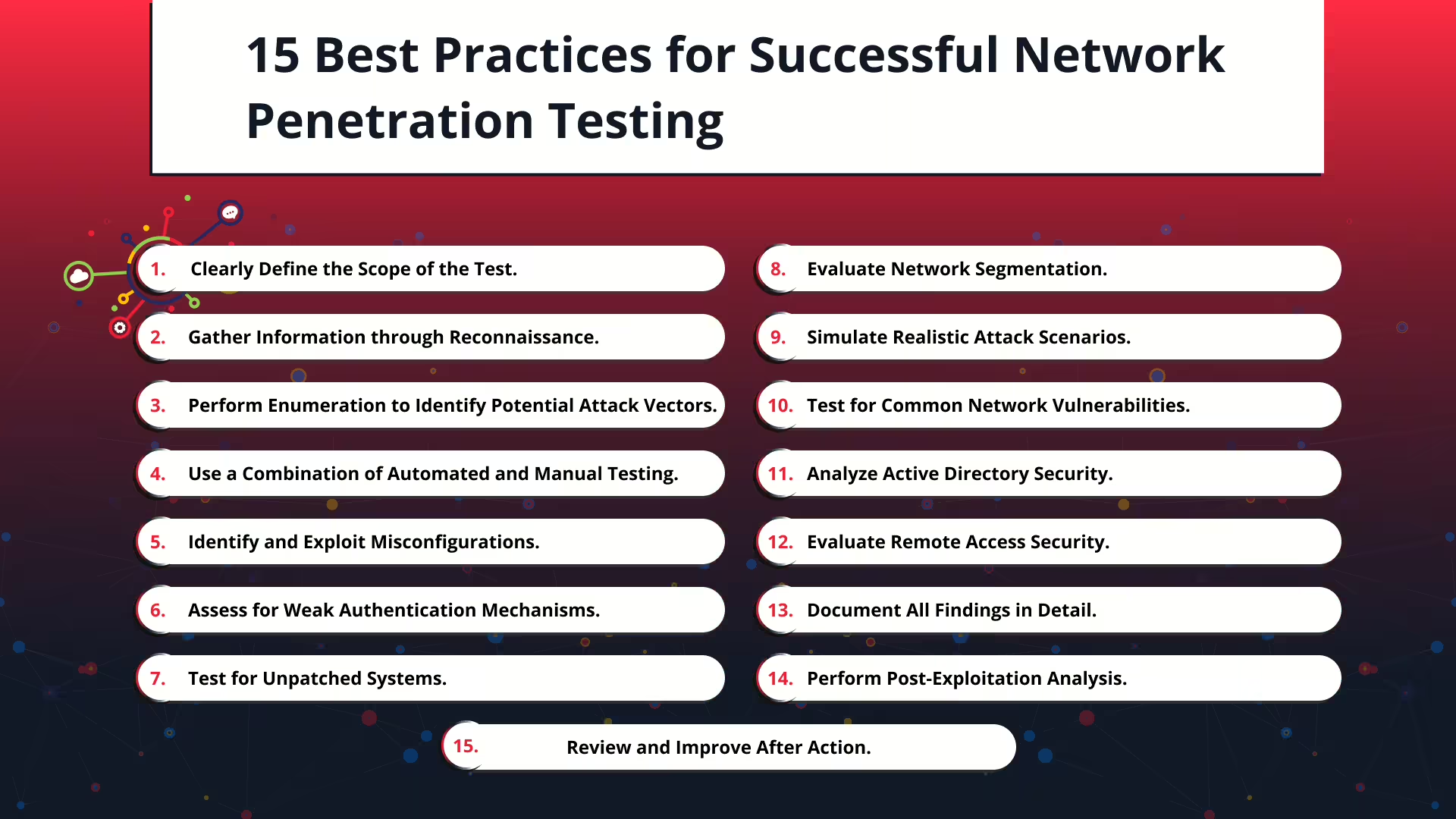

15 Best Practices for Successful Network Penetration Testing

Nov 5, 2024 Information hub

Explore 15 essential network penetration testing practices to identify and fix vulnerabilities, strengthen defenses, and protect your network from cyber threats.

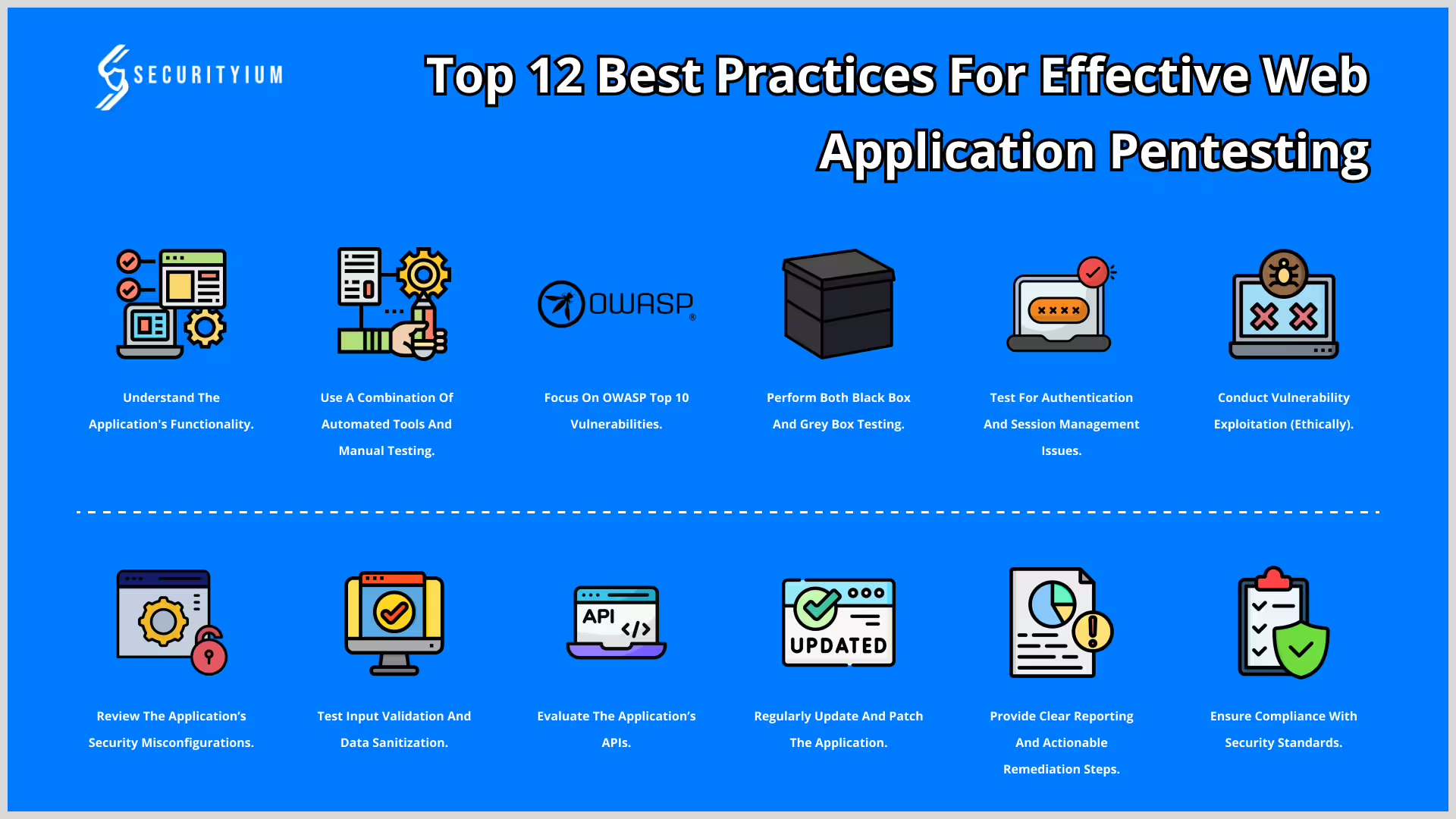

Top 12 Best Practices for Effective Web Application Pentesting

Oct 29, 2024 Information hub

Discover the top 12 best practices for web application pentesting to strengthen security, identify vulnerabilities, and protect your app from cyberattacks.

Understanding the Open Web Application Security Project (OWASP) Top Ten for Web App Security

Oct 25, 2024 Technical Write ups

Learn about the Open Web Application Security Project (OWASP) Top Ten, a key guide for tackling the most critical web app vulnerabilities. Follow best practices to reduce security risks and ensure data protection.