Jan 15, 2025 Information hub

A Comprehensive Guide to OWASP Top 10 LLM Applications 2025

Large Language Models (LLMs) have become indispensable in transforming industries, offering unparalleled advancements in automation, customer engagement, and decision-making processes. From streamlining operations in finance to enabling personalized healthcare, the adoption of LLMs is widespread. Yet, as organizations leverage these models, they must also navigate a growing array of security risks, making the OWASP Top 10 LLM Applications 2025 a vital resource.

The significance of this framework lies in its targeted focus on the unique vulnerabilities posed by LLMs. Unlike traditional software systems, LLMs operate on vast datasets, using probabilistic patterns to generate responses. This complexity introduces risks, from data leaks to malicious prompt manipulations. For instance, a customer service chatbot could inadvertently expose sensitive user information due to improper output handling.

As AI technologies continue to evolve, their integration across industries grows more sophisticated. However, this sophistication comes with an expanded attack surface. Hackers can exploit prompt injection vulnerabilities or poison datasets, leading to biased outputs or system compromises. Without a comprehensive understanding of these threats, organizations risk not only financial losses but also reputational damage.

Statistics emphasize the urgency of addressing these challenges. According to Gartner, by 2026, over 60% of AI applications will face security incidents related to LLMs, highlighting the critical need for robust mitigation strategies. Additionally, OpenAI reported that prompt injection attacks are among the top three threats affecting GPT-based applications.

The OWASP Top 10 LLM Applications 2025 is designed to provide developers, security experts, and businesses with actionable insights to secure their AI systems. It covers everything from common vulnerabilities, such as system prompt leakage, to emerging risks like unbounded consumption. By proactively addressing these risks, organizations can build safer, more reliable LLM-powered solutions.

In this blog, we’ll explore the OWASP framework in depth, offering practical examples, real-world scenarios, and actionable strategies to help you navigate the evolving landscape of LLM security.

Understanding OWASP Top 10 LLM Applications 2025

The OWASP Top 10 LLM Applications 2025 is a globally recognized framework that identifies the most critical security risks in LLM-powered systems. It is tailored specifically for applications utilizing LLMs, offering detailed insights into their unique vulnerabilities and solutions.

Why It’s Relevant Today

With LLMs powering diverse applications, from automated legal document drafting to personalized marketing campaigns, their security is paramount. These models are often trained on vast, unverified datasets, which can introduce biases, vulnerabilities, or sensitive information into their outputs.

The framework not only categorizes risks but also highlights mitigation strategies, ensuring that developers can design resilient systems. It underscores the importance of secure development practices, regular audits, and adopting a zero-trust approach when integrating LLMs.

Key Highlights of the 2025 Version

- New Additions: Risks like system prompt leakage and vector embedding weaknesses address modern challenges in LLM deployments.

- Expanded Coverage: Topics such as unbounded consumption and excessive agency reflect the evolving complexities of LLM applications.

- Community Collaboration: The 2025 list was developed through extensive feedback from global security experts, ensuring relevance and applicability.

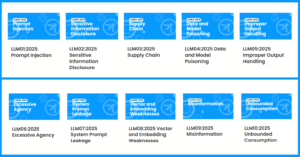

OWASP Top 10 LLM Applications 2025 Risks

1. Prompt Injection Vulnerabilities

Prompt injection is a significant security risk in LLM applications. It occurs when malicious actors manipulate input prompts to alter the behavior of the LLM, bypass safety mechanisms, or embed harmful instructions. These vulnerabilities arise because LLMs are designed to process inputs without distinguishing between legitimate and harmful prompts, making them susceptible to exploitation. The first risk of OWASP Top 10 LLM Applications 2025.

Prompt injection can take two forms:

- Direct Prompt Injection: The attacker embeds malicious commands directly into user input, causing the LLM to generate unauthorized outputs or execute harmful actions.

- Indirect Prompt Injection: Malicious instructions are hidden in external sources like documents or websites. When these are processed by the LLM, they alter its behavior without any visible indicators to the user.

The complexity of multimodal systems, where text interacts with images or audio, further increases the risk. For instance, hidden instructions in an image accompanying text can trigger unintended responses from an LLM.

Why It’s Critical

Prompt injection can lead to:

- Unauthorized Access: Attackers can gain access to sensitive systems or data by tricking the LLM into bypassing authentication checks.

- Content Manipulation: Malicious actors may influence outputs, resulting in biased or false information.

- System Compromise: The model may perform actions beyond its intended scope, such as executing commands in connected systems.

Examples

- Direct Injection: An attacker embeds commands within user input, bypassing safety protocols.

- Indirect Injection: External data sources with hidden malicious instructions manipulate LLM outputs.

Mitigation Strategies

- Enforce strict input validation protocols.

- Conduct adversarial testing to simulate attacks.

- Define clear output formats and validate them against predefined rules.

2. Sensitive Information Disclosure

LLMs are prone to inadvertently revealing sensitive information. This issue arises because the models are trained on vast datasets, which might include confidential data. When prompted, the LLM could recall and expose this information, leading to significant privacy violations. The second risk of OWASP Top 10 LLM Applications 2025.

For example, a chatbot trained on customer service data might reveal a user’s financial details when queried. Similarly, proprietary algorithms or confidential training data can leak through outputs.

Why It’s Critical

Sensitive information disclosure can result in:

- Privacy Breaches: Exposing personal data like PII or financial details violates regulations such as GDPR and CCPA.

- Intellectual Property Loss: Proprietary algorithms or datasets might be disclosed, undermining competitive advantages.

- Reputational Damage: Organizations risk losing customer trust if their LLMs are found to be insecure.

Examples

- Chatbots exposing PII during conversations.

- Proprietary algorithms leaked through poorly designed outputs.

Solutions

- Implement differential privacy techniques.

- Regularly audit datasets for sensitive content.

- Use encryption for data storage and retrieval.

3. Supply Chain Vulnerabilities

LLM applications often rely on third-party tools, APIs, and pre-trained models, creating a supply chain risk. Malicious actors can exploit vulnerabilities in these dependencies to compromise the application. For instance, attackers might inject backdoors into pre-trained models or manipulate APIs to execute unauthorized actions.

The rise of collaborative development platforms, such as Hugging Face, has also increased the risk of supply chain attacks. Fine-tuning methods like Low-Rank Adaptation (LoRA) add another layer of complexity, as compromised adapters can introduce vulnerabilities. The third risk of OWASP Top 10 LLM Applications 2025.

Why It’s Critical

Supply chain vulnerabilities can lead to:

- System Compromise: A malicious dependency can act as a backdoor, allowing attackers to control the application.

- Data Breaches: Vulnerable APIs or models may expose sensitive user or organizational data.

- Service Disruption: Exploiting outdated components can lead to operational failures.

Examples

- Malicious pre-trained models downloaded from public repositories.

- Exploitation of outdated APIs.

Mitigation

- Vet all third-party components rigorously.

- Maintain a comprehensive SBOM.

- Regularly patch and update dependencies.

4. Data and Model Poisoning

Data and model poisoning involves the deliberate manipulation of training datasets or model parameters to compromise the integrity of LLM outputs. Attackers may introduce biases, harmful behaviors, or backdoors during the training phase.

This type of attack is particularly insidious because it affects the core functionality of the model. Poisoned data can subtly alter outputs, making detection difficult until significant harm has occurred. The fourth risk of OWASP Top 10 LLM Applications 2025.

Why It’s Critical

Data and model poisoning can result in:

- Biased Outputs: Models may favor certain viewpoints or decisions, undermining fairness and objectivity.

- Backdoors: Attackers can trigger specific responses by using crafted inputs, compromising security.

- Reduced Trust: Users may lose confidence in the model’s reliability and accuracy.

Examples

- Poisoned datasets altering model behavior to favor specific outputs.

- Fine-tuned models embedding backdoors for unauthorized access.

Mitigation Strategies

- Implement tamper-proof data pipelines.

- Conduct rigorous anomaly detection during training.

- Use red-teaming exercises to simulate and address poisoning attacks.

5. Improper Output Handling

Improper output handling occurs when LLM-generated responses are not validated or sanitized before being used by downstream systems. This can lead to unintended actions, such as executing harmful commands or exposing sensitive data. The fifth risk of OWASP Top 10 LLM Applications 2025.

For example, an LLM might generate SQL queries that, if executed without validation, could lead to database breaches. Similarly, unsanitized outputs might contain code that could be exploited for cross-site scripting (XSS) attacks.

Why It’s Critical

Improper output handling can lead to:

- Security Breaches: Outputs like unsanitized code can introduce vulnerabilities in connected systems.

- Misinformation: Unvalidated responses may propagate incorrect or harmful content.

- Operational Failures: Incorrect outputs can disrupt system workflows or cause unintended actions.

Examples

- LLM-generated SQL queries executed without validation, leading to SQL injection.

- Chatbots returning unfiltered, sensitive data in response to user queries.

Mitigation Strategies

- Apply semantic filtering and context-aware encoding.

- Validate and sanitize all outputs before processing.

- Use strict access controls to prevent unintended actions.

6. Excessive Agency Risks

Excessive agency arises when LLMs are granted more autonomy than necessary, enabling them to perform actions without adequate oversight. This can lead to unintended consequences, such as unauthorized transactions or changes to system configurations. The sixth risk of OWASP Top 10 LLM Applications 2025.

For instance, an LLM integrated into a financial system might autonomously approve large transactions based on ambiguous prompts, bypassing critical checks.

Why It’s Critical

Excessive agency can lead to:

- Unauthorized Actions: Models might perform tasks beyond their intended scope.

- System Instability: Autonomous decisions can disrupt operations or cause financial losses.

- Increased Risk Exposure: Granting unnecessary permissions creates additional attack surfaces.

Examples

- Chatbots autonomously initiating financial transactions without user approval.

- LLMs altering system configurations based on ambiguous prompts.

Mitigation Strategies

- Restrict LLM permissions to essential functions.

- Implement human-in-the-loop mechanisms for critical decisions.

- Monitor LLM activities to identify and mitigate excessive actions.

7. System Prompt Leakage

System prompts guide LLM behavior but may inadvertently contain sensitive information, such as access credentials or application rules. If exposed, these prompts can be exploited by attackers to bypass security measures or gain unauthorized access. The seventh risk of OWASP Top 10 LLM Applications 2025.

For example, a leaked prompt might reveal that a chatbot restricts transactions above a certain limit, enabling attackers to exploit this knowledge.

Why It’s Critical

System prompt leakage can lead to:

- Unauthorized Access: Attackers can manipulate the application using exposed prompts.

- Compromised Security: Sensitive information in prompts can be exploited to bypass safeguards.

- Loss of Control: Knowledge of system prompts enables attackers to predict and manipulate behavior.

Examples

- Attackers extracting system prompts containing API keys or database credentials.

- Leaked prompts exposing application rules and logic.

Mitigation Strategies

- Avoid embedding sensitive information in system prompts.

- Use external systems for critical security controls.

- Regularly audit and sanitize prompts to ensure compliance.

8. Vector and Embedding Weaknesses

Vectors and embeddings are essential for data retrieval and contextual responses in LLM applications. However, these mechanisms can be exploited through unauthorized access, poisoning, or inversion attacks, compromising data integrity.

Embedding inversion attacks, for instance, allow attackers to reconstruct sensitive information from vector data, leading to privacy breaches. The eighth risk of OWASP Top 10 LLM Applications 2025.

Why It’s Critical

Vector and embedding weaknesses can result in:

- Data Leaks: Unauthorized access to embedding databases exposes sensitive information.

- Manipulated Outputs: Poisoned embeddings can alter model behavior.

- Legal Risks: Misuse of copyrighted material during embedding can lead to compliance issues.

Examples

- Unauthorized access to embedding databases containing proprietary data.

- Data poisoning attacks targeting vector stores.

Mitigation Strategies

- Implement fine-grained access controls for vector stores.

- Encrypt embeddings and monitor their usage.

- Validate data sources to prevent poisoning.

9. Misinformation Propagation

Misinformation is a prevalent issue in LLMs, stemming from hallucinations, biases, or gaps in training data. These models might generate plausible-sounding but incorrect information, misleading users. The ninth risk of OWASP Top 10 LLM Applications 2025.

For example, an LLM might fabricate legal precedents or provide inaccurate medical advice, leading to harmful decisions.

Why It’s Critical

Misinformation propagation can lead to:

- Eroded Trust: Users may lose confidence in the system.

- Harmful Outcomes: Incorrect advice can result in financial, legal, or health-related repercussions.

- Reputational Damage: Organizations using LLMs may face public backlash or lawsuits.

Examples

- Healthcare chatbots providing inaccurate medical advice.

- LLMs fabricating references or legal cases.

Mitigation Strategies

- Train models with verified and reliable datasets.

- Use Retrieval-Augmented Generation (RAG) to ground outputs in factual information.

- Implement cross-verification processes to ensure accuracy.

10. Unbounded Consumption Risks

Unbounded consumption refers to excessive resource usage by LLM applications, often triggered by malicious or unintended inputs. This can result in financial losses, service disruptions, or even denial-of-service (DoS) attacks. The tenth risk of OWASP Top 10 LLM Applications 2025.

For example, attackers might overload an LLM API with high-volume queries, consuming computational resources and rendering the system unavailable.

Why It’s Critical

Unbounded consumption can result in:

- Operational Failures: Overloaded systems may crash or become unresponsive.

- Financial Losses: Excessive usage increases costs, particularly in pay-per-use models.

- Service Disruption: Legitimate users may be unable to access the system.

Examples

- Attackers sending high-volume queries to overload LLM APIs.

- Malicious inputs triggering resource-intensive processes.

Mitigation Strategies

- Apply rate-limiting and user quotas.

- Optimize LLM configurations for efficiency.

- Monitor resource usage and detect anomalies in real-time.

Current Trends and Future Developments

The future of LLM security is shaped by the growing adoption of AI across industries. Key trends include:

- Privacy-Preserving Techniques

Technologies like federated learning and homomorphic encryption are gaining traction. These methods ensure data privacy while enabling effective model training. - Resilient RAG Systems

Retrieval-Augmented Generation (RAG) is being refined to minimize risks associated with vector embeddings and data poisoning. - Regulatory Developments

Governments worldwide are introducing stricter regulations to govern AI usage, emphasizing transparency and accountability. - Collaborative Security Efforts

Organizations are increasingly collaborating with cybersecurity experts to address LLM-specific risks.

Benefits of Addressing OWASP Top 10 for LLM

Implementing the OWASP Top 10 LLM Applications 2025 framework offers numerous advantages that bolster the security and reliability of AI systems:

- Enhanced Data Security: By addressing vulnerabilities like sensitive information disclosure and system prompt leakage, organizations can protect user data and maintain compliance with privacy regulations.

- Increased Trust: Proactively managing risks such as misinformation and improper output handling builds confidence among users, partners, and stakeholders.

- Operational Resilience: Mitigating risks like unbounded consumption and excessive agency ensures that systems remain functional and efficient, even under duress.

- Regulatory Compliance: Adhering to best practices outlined in the framework helps organizations meet global standards like GDPR, CCPA, and ISO 27001.

- Cost Efficiency: Preventing resource misuse and optimizing system performance reduce operational costs, especially in cloud-based environments.

- Reputational Protection: Minimizing risks associated with data leaks, biases, or misinformation helps safeguard an organization’s reputation.

- Future-Proofing: Regularly updating practices based on OWASP guidance prepares organizations for evolving threats in AI and machine learning landscapes.

- Competitive Advantage: Secure and reliable AI systems differentiate businesses in a competitive market, attracting customers who prioritize data safety.

- Innovation Enablement: With risks managed, teams can focus on leveraging LLMs for innovative applications without fear of security breaches.

- Collaboration Opportunities: Adopting global best practices facilitates partnerships with other organizations committed to secure AI development.

Conclusion

The OWASP Top 10 LLM Applications 2025 provides a comprehensive roadmap for navigating the complexities of LLM security. As LLMs continue to transform industries, addressing vulnerabilities like prompt injection, data poisoning, and misinformation becomes increasingly critical.

Proactive measures such as rigorous input validation, secure data handling, and robust monitoring systems not only protect organizations from financial and reputational harm but also enable them to leverage the full potential of AI technologies. The evolving threat landscape requires ongoing vigilance, collaboration, and innovation to stay ahead of adversaries.

By implementing the OWASP framework, organizations can ensure their AI systems are not just effective but also secure and trustworthy. The integration of these practices builds resilience, fosters trust, and positions businesses as leaders in the ethical and responsible use of AI.

Key Takeaways

- The OWASP Top 10 LLM Applications 2025 highlights critical risks, including prompt injection, data poisoning, and excessive agency.

- Proactively addressing these vulnerabilities enhances security, trust, and operational efficiency.

- Adopting this framework enables organizations to innovate responsibly, ensuring their AI systems are robust, compliant, and future-ready.

Top 5 FAQs

What is the OWASP Top 10 LLM Applications 2025?

The OWASP Top 10 LLM Applications 2025 is a security framework identifying the most critical risks in large language model (LLM) applications. It addresses vulnerabilities unique to LLMs, such as prompt injection, data poisoning, and system prompt leakage, and provides strategies to mitigate these risks effectively.

Why is addressing the OWASP Top 10 LLM risks important?

Addressing these risks ensures the security, reliability, and ethical use of LLM-powered systems. It helps organizations prevent data breaches, comply with regulations like GDPR, and maintain user trust by safeguarding against vulnerabilities like sensitive information disclosure and misinformation propagation.

What are some real-world examples of LLM vulnerabilities?

Real-world examples include:

- Chatbots exposing personal user information due to improper output handling.

- LLMs generating biased or false information due to poisoned training datasets.

- Attackers exploiting API vulnerabilities in LLM applications, causing service disruptions.

How can organizations mitigate prompt injection vulnerabilities in LLMs?

Organizations can mitigate prompt injection risks by:

- Implementing strict input validation protocols.

- Conducting adversarial testing to identify potential attack vectors.

- Using context-aware filtering mechanisms to ensure only safe prompts are processed.

What are the key trends shaping the future of LLM security?

Key trends include:

- The adoption of privacy-preserving techniques like federated learning.

- Regulatory developments emphasizing AI transparency and accountability.

- Advances in resilient Retrieval-Augmented Generation (RAG) systems to improve output accuracy.